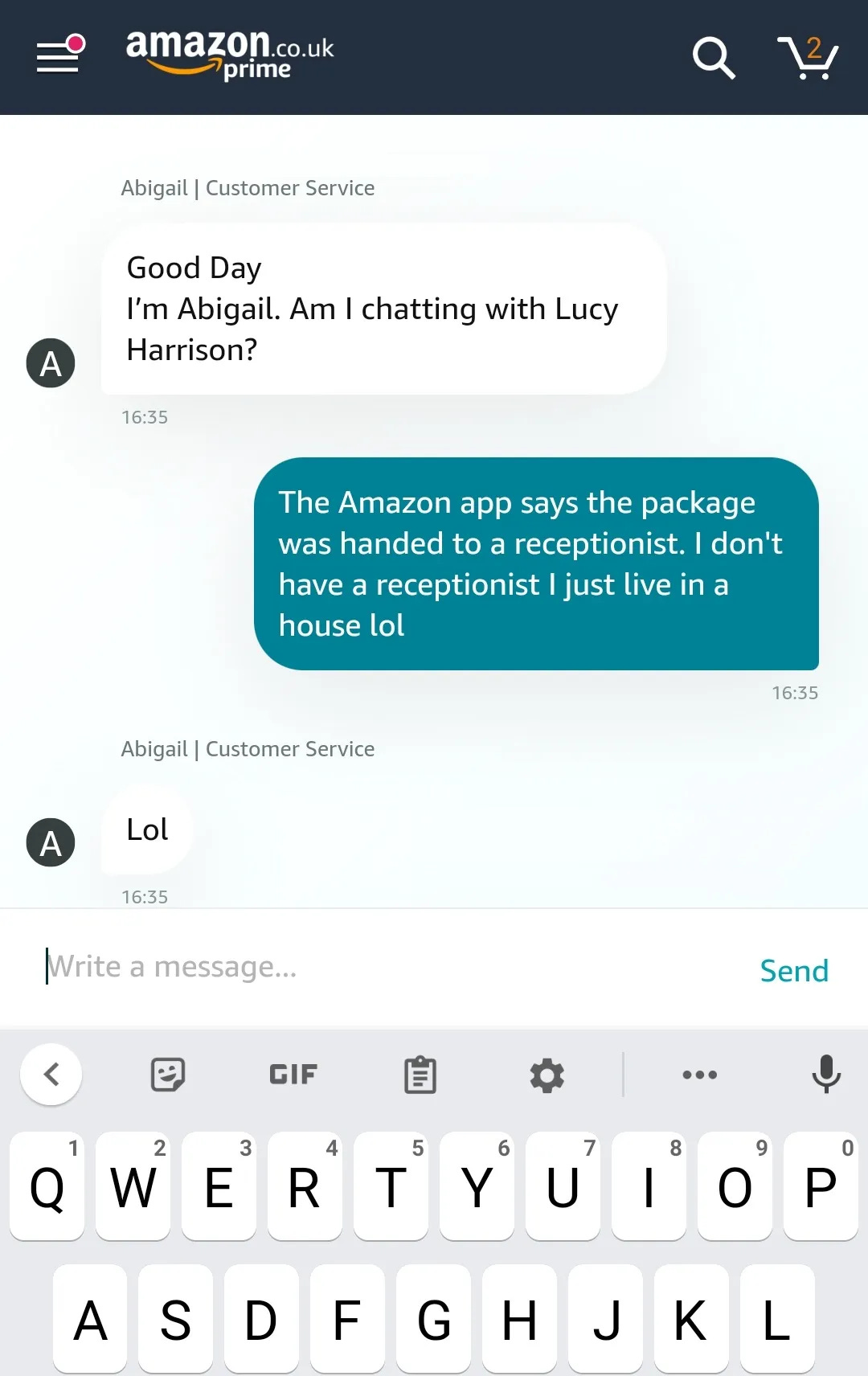

There is no honor fighting with a customer service bot

I want to complain to a real person.

Embedded is your essential guide to what’s good on the internet, written by Kate Lindsay and edited by Nick Catucci.

I’d like to preface this by saying that customer service is a thankless job and those who do it should be honored like the troops. —Kate

I come from a speak-to-the-manager family. I suppose I’m lucky that one of my most traumatic childhood memories is cowering in the corner of a Delia’s as my parents yelled at staff about the price of jeans. I wouldn’t be surprised if my high school administrative office had a special ringtone for my dad.

Today, my sister won’t hesitate to threaten GrubHub with a drone strike over a single loose french fry in the bag. Personally, I have tried to break the cycle and avoid blowing up at 19-year-old retail assistants or remote gig workers in Bangladesh if I can. But that promise is getting harder and harder to keep, as customer service positions are filled not by conscious humans who can be reasoned with, but by bots.

Shortly after Christmas, I got into a fight about a birdfeeder accessory. I’d gifted my dad one that, it turns out, he already had. The online store doesn’t have an exchange policy, but the website said in no uncertain terms that I could return the device within 60 days for a refund.

Whatever software the company uses to deal with customers, however, disagreed. I can’t say for certain I was sending my emails to robots, but I’d put money on it. It didn’t matter how many times I quoted or linked to the site, “Sofija” continued to insist that they would not let me return this item, and that I was going to die with this birdfeeder extender still in my possession.

“That’s going to be the future of customer service,” Parker Molloy said on a recent episode of ICYMI. We were talking about Grok, specifically how it was being used to gaslight users by saying that issues had been resolved when they clearly had not. “It’s already starting and it’s only going to get worse,” Molloy continued. “You’re going to have a chatbot that will tell you whatever you want to hear, but not actually address your problem.”

Recently, I woke up to someone who seemed to be in the midst of a mental health crisis outside of my apartment in Brooklyn. I was worried for them as well as for me and my neighbors, but I didn’t want to call the cops, so I looked online for resources instead. I found an NYC.gov chat service that allowed me to check a box saying that I was reporting a mental health crisis for someone else. I described the situation and provided the intersection where this person was located.

I then spent the next 15 minutes trying to convince what seemed to be a chatbot that I was not the one having the crisis, and that I was not going to hurt myself. I was as clear as I could have possibly been, but there was seemingly only one script for this conversation, and it assumed the human reporting the issue was in distress, no matter what boxes were checked or explanations were offered.

“I just want to know the best way to help this person,” I wrote.

“It sounds like there are some things going on that are causing you some anxiety and I want to hear more about that,” “Travis” replied. “Would you mind telling me if you have been experiencing thoughts of suicide over the past few days, including today?” I had to see this conversation to its conclusion, because I didn’t want to abandon the chat and prompt the robot to call 911 on me.

I’m sure the majority of customer service interactions are rote, but outsourcing the job to bots or AI leaves no room for nuance, no understanding of human experience. A customer service rep at Pets Best, for instance, not only helped me end my insurance when my cat, Birdie, passed away a few years ago, but also refunded me for the month prior. Chewy sent me a card. These are things only a human who knows what it is to love and lose a pet would think to do.

Now it’s far more likely you’ll get something like the asshole robot at the car rental company I used in the fall to go upstate. The day I was supposed to return the car, that Mexican Navy ship crashed into the Brooklyn Bridge, and traffic coming in and out of the city hit a standstill. I was going to miss my selected return window, and the robot didn’t give a fuck. I tried to explain the boat accident—an unprecedented incident that was beyond my control (and resulted in two deaths)—but it was useless. The chatbot had apparently never been programmed to respond to an old sailing ship drifting into a landmark and snarling traffic, so it granted me no leniency. I was charged a late fee.

When sparring (respectfully, I hope) with a human customer service rep, you have a worthy opponent. They can respond and clarify and compromise based on your situation, even if they’re bounded by corporate rules. There is no honor in battling a customer service bot. They’re the HR of the customer community: there to “help,” but always for the benefit of the company. You might as well yell into a plastic cup.

My brain works off a script, too. When a business takes my money but is completely unreachable in any real way if the transaction goes wrong, I no longer see it as a business. It is a scam, and I will not be patronizing it. Sorry, that’s just my policy.

oh my god, I know Sofija!! By which I mean to say, I too have argued with that bird feeder company bot, and she really trained me up in how many times you have to explain yourself to a robot. If you ever are tempted to believe the chats can really understand you, talk to Sofija for a while.

Bots also cannot make reasonable adjustments for disabled and neurodivergent people - and in the UK this leaves companies open to legal action - though most of the people who should take it don’t have the means to 😔